SafeSearch

When to use the SafeSearch Activity

As the name suggests, the SafeSearch activity detects explicit or suggestive content in an image, such as adult content, violence, weapons, and visually disturbing content. Beyond flagging an image as unsafe, Amazon Rekognition also returns a hierarchical list of labels with confidence scores, so that you can filter images according to your requirements.
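The filtering idea can be sketched in Python. The sketch assumes the labels arrive in the shape of an Amazon Rekognition DetectModerationLabels response, where each entry in `ModerationLabels` has a `Name`, a `ParentName`, and a `Confidence`. The sample values, the function name `filter_labels`, and the 80 percent threshold are hypothetical and are not output of the activity.

```python
from typing import Any, Dict, List, Optional

# Hypothetical sample, shaped like a DetectModerationLabels response.
SAMPLE_RESPONSE: Dict[str, Any] = {
    "ModerationLabels": [
        {"Name": "Violence", "ParentName": "", "Confidence": 97.2},
        {"Name": "Weapon Violence", "ParentName": "Violence", "Confidence": 97.2},
        {"Name": "Suggestive", "ParentName": "", "Confidence": 55.0},
    ]
}


def filter_labels(
    response: Dict[str, Any],
    min_confidence: float = 80.0,
    parent: Optional[str] = None,
) -> List[str]:
    """Return label names at or above min_confidence.

    When parent is given, keep only labels whose ParentName equals it,
    which selects the second level of the hierarchy under that category.
    """
    selected = []
    for label in response.get("ModerationLabels", []):
        if label["Confidence"] < min_confidence:
            continue
        if parent is not None and label.get("ParentName") != parent:
            continue
        selected.append(label["Name"])
    return selected
```

Top-level categories have an empty `ParentName`, so `filter_labels(SAMPLE_RESPONSE, parent="Violence")` keeps only the more specific labels under that category.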

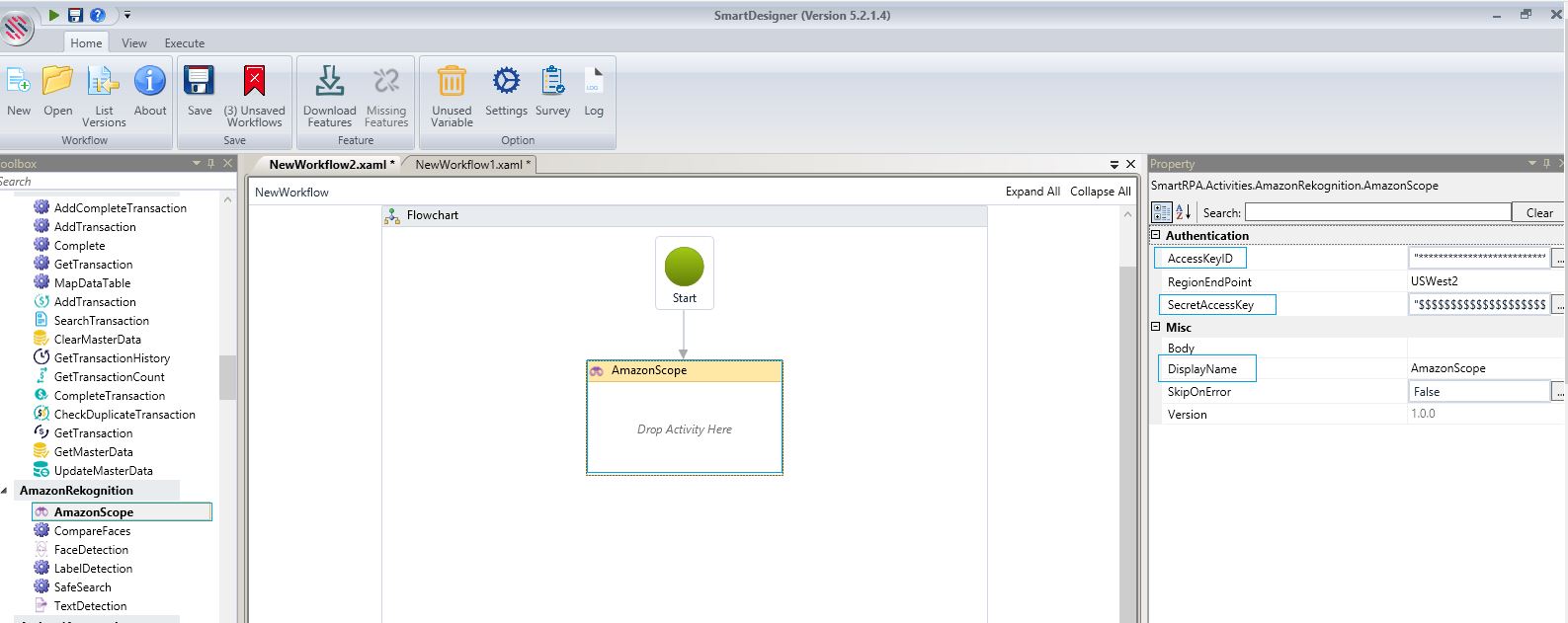

Figure 1

Drag and drop an AmazonScope drop zone from the AmazonRekognition package onto the canvas. Provide the AccessKeyID and SecretAccessKey in the corresponding fields, then select the RegionEndPoint from the dropdown.

DisplayName is populated automatically for all activities.

Note: The AWS AccessKeyID and AWS SecretAccessKey are provided at the time of registration. They are specific to the endpoint region, which is also selected at registration.

Important: Do not share these keys with anyone, and do not expose them publicly.
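For scripts that call Amazon Rekognition outside the studio, one common way to keep the keys out of source files is to read them from environment variables. The sketch below is a minimal illustration of that approach. It uses the standard AWS SDK variable names `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`, and `AWS_DEFAULT_REGION`. The helper names `load_credentials` and `mask` are hypothetical.

```python
import os
from typing import Dict, Mapping

# Standard environment variable names read by the AWS SDKs.
REQUIRED = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_DEFAULT_REGION")


def load_credentials(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Read the key pair and region from the environment, failing if any is missing."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise KeyError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED}


def mask(secret: str, visible: int = 4) -> str:
    """Hide all but the last few characters so a key can be mentioned in a log."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]
```

Only the masked form of a key should ever be written to a log or shown on screen.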

Figure 2

Drag and drop a SafeSearch activity inside the AmazonScope drop zone.

Specify the local ImagePath of the image to be checked for adult and racy content. Create a variable in the Result field to hold the output.
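For comparison, the same check can be made directly against Amazon Rekognition with the boto3 SDK. This is a sketch under the assumption that the activity corresponds to the DetectModerationLabels operation, which this guide does not confirm. The function names, the file name `sample.jpg`, and the region `us-east-1` are placeholders.

```python
from pathlib import Path
from typing import Any, Dict, List


def detect_unsafe_labels(
    client: Any, image_path: str, min_confidence: float = 50.0
) -> List[Dict[str, Any]]:
    """Send a local image to DetectModerationLabels and return the label list."""
    image_bytes = Path(image_path).read_bytes()
    response = client.detect_moderation_labels(
        Image={"Bytes": image_bytes},
        MinConfidence=min_confidence,
    )
    return response["ModerationLabels"]


def run_example(image_path: str = "sample.jpg", region: str = "us-east-1") -> None:
    """Placeholder entry point; needs boto3 installed and AWS credentials configured."""
    import boto3  # third-party AWS SDK, imported here so the helper above stays dependency-free

    rekognition = boto3.client("rekognition", region_name=region)
    for label in detect_unsafe_labels(rekognition, image_path):
        print(label["Name"], round(label["Confidence"], 1))
```

Passing the client in as a parameter lets the helper be exercised with a stand-in object, without calling AWS.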

Drag and drop a WriteLog activity from the NotificationAutomation package. Provide the InputString and set the LogLevel.
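The pairing of a message with a log level can be illustrated in Python with the standard `logging` module. This sketch is not the WriteLog activity itself. It assumes the detected labels are a list of dictionaries with `Name` and `Confidence` keys. The function name `build_log_entry` and the message wording are hypothetical.

```python
import logging
from typing import Any, Dict, List, Tuple


def build_log_entry(labels: List[Dict[str, Any]]) -> Tuple[int, str]:
    """Choose a log level and compose a one-line message from the detected labels."""
    if not labels:
        return logging.INFO, "No unsafe content detected."
    parts = [f"{label['Name']} ({label['Confidence']:.1f}%)" for label in labels]
    return logging.WARNING, "Unsafe content detected: " + ", ".join(parts)


def write_log(labels: List[Dict[str, Any]]) -> None:
    """Emit the composed entry through the standard logging module."""
    level, message = build_log_entry(labels)
    logging.getLogger("safesearch").log(level, message)
```

An empty label list is logged at INFO level, and any detected label raises the entry to WARNING.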

Execute the workflow.

Figure 3

The provided image is as follows:

Figure 4

In the Output window, the returned label indicates that the image shows a violent scene with weapons.

Figure 5