Safe search

The Safe Search activity detects inappropriate or offensive content in an image, such as adult content, violence, weapons, and visually disturbing content. Beyond flagging an image based on the presence of unsafe content, Amazon Rekognition also returns a hierarchical list of labels with confidence scores, which you can use to filter images based on your requirements.
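To show how the hierarchical labels and confidence scores can be used for filtering, here is a minimal sketch. It assumes the labels have the shape returned by Rekognition's DetectModerationLabels API: each label carries a `Name`, a `Confidence` from 0 to 100, and a `ParentName` that is an empty string for top-level categories. The sample values and the helper names `filter_labels` and `build_hierarchy` are hypothetical and for illustration only.

```python
from collections import defaultdict

# Hypothetical sample shaped like the ModerationLabels list returned by
# Rekognition's DetectModerationLabels API. The values are illustrative only.
SAMPLE_LABELS = [
    {"Name": "Violence", "Confidence": 92.5, "ParentName": ""},
    {"Name": "Weapons", "Confidence": 88.1, "ParentName": "Violence"},
    {"Name": "Visually Disturbing", "Confidence": 40.2, "ParentName": ""},
]


def filter_labels(labels, min_confidence):
    """Keep only the labels whose confidence meets the threshold (0-100)."""
    return [lbl for lbl in labels if lbl["Confidence"] >= min_confidence]


def build_hierarchy(labels):
    """Map each top-level category to the names of its child labels."""
    tree = defaultdict(list)
    for lbl in labels:
        parent = lbl.get("ParentName", "")
        if parent:
            # Child label: attach it under its parent category.
            tree[parent].append(lbl["Name"])
        else:
            # Top-level label: make sure it appears even with no children.
            tree.setdefault(lbl["Name"], [])
    return dict(tree)


if __name__ == "__main__":
    confident = filter_labels(SAMPLE_LABELS, min_confidence=80)
    print(build_hierarchy(confident))  # prints {'Violence': ['Weapons']}
```

Raising or lowering `min_confidence` is how you tune the filter to your own requirements: a higher threshold flags fewer images but with more certainty.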

Technical Reference:

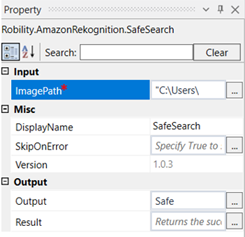

| Section | Property | Description |
|---|---|---|
| INPUT | ImagePath* | Specify the path of the image file to be processed. |
| MISC | Display Name | Displays the name of the activity. You can customize the activity name to help troubleshoot issues faster. This name is used for logging purposes. |
| | SkipOnError | Specifies whether to continue executing the workflow if the activity throws an error. Accepts only a Boolean value, True or False; the default is False. True: continues the workflow to the next step. False: stops the workflow and throws an error. |
| | Version | Specifies the version of the Amazon Rekognition feature in use. |
| OUTPUT | Output | Declare a variable here to see the confidence scores. This is not a mandatory field. |
| | Result | Declare a variable here to validate the activity. Accepts only a Boolean value. This is not a mandatory field. |

*Mandatory field to execute the workflow

The following example illustrates how to use the Safe Search activity to detect adult or violent content in a given image.

Example:

1. Drag and drop an Amazon Scope activity.

2. Enter the access key ID, region endpoint, and secret access key.

3. Drag and drop a Safe Search activity from the Amazon Rekognition feature into the Amazon Scope.

4. Click the activity.

5. Enter the path of the image file to be processed.

6. Enter the declared variable in the Output box. Here, it is "safe".

7. Drag and drop a WriteLog activity below the Amazon Scope.

8. Enter the variable declared above in the input string.

9. Set the log level to "Info".

10. Execute the activity.

The bot executes the activity and displays the violence confidence score of the image in the output box.
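For readers who want to reproduce the same steps outside the workflow, the sketch below approximates them in Python with the AWS SDK (boto3). It assumes the Safe Search activity wraps Rekognition's DetectModerationLabels API, and that the top-level label of interest is named "Violence". The function names `safe_search`, `violence_confidence`, and `log_violence_score`, and the default threshold of 50, are hypothetical. The access key ID, region, and secret access key correspond to the values entered in the Amazon Scope, and logging at INFO level stands in for the WriteLog activity.

```python
import logging


def safe_search(image_path, region, access_key_id, secret_access_key,
                min_confidence=50.0, client=None):
    """Send a local image to Rekognition and return its moderation labels.

    Hypothetical helper: `client` can be a stub for testing; otherwise a
    real boto3 Rekognition client is created from the given credentials.
    """
    if client is None:
        import boto3  # imported lazily so a stub client can be used without boto3

        client = boto3.client(
            "rekognition",
            region_name=region,
            aws_access_key_id=access_key_id,
            aws_secret_access_key=secret_access_key,
        )
    with open(image_path, "rb") as f:
        image_bytes = f.read()
    # Labels below min_confidence are not returned by the API.
    response = client.detect_moderation_labels(
        Image={"Bytes": image_bytes}, MinConfidence=min_confidence
    )
    return response["ModerationLabels"]


def violence_confidence(labels):
    """Return the confidence of the "Violence" label, or 0.0 if it is absent."""
    for lbl in labels:
        if lbl["Name"] == "Violence":
            return lbl["Confidence"]
    return 0.0


def log_violence_score(labels):
    """Log the violence score at INFO level, similar to a WriteLog step."""
    score = violence_confidence(labels)
    logging.info("Violence confidence: %.1f", score)
    return score
```

For example, after `logging.basicConfig(level=logging.INFO)`, calling `log_violence_score(safe_search("photo.jpg", "us-east-1", KEY_ID, SECRET))` logs the score, where `photo.jpg`, `KEY_ID`, and `SECRET` are placeholders for your own image path and credentials. A score of 0.0 means Rekognition did not return a "Violence" label above the chosen threshold.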